Image Source: NY Times

Topics: Artificial Intelligence, Computer Science, Philosophy, Robotics, Singularity

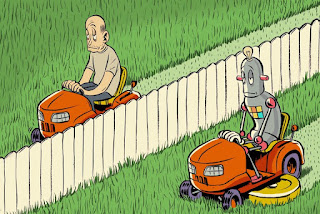

From Frankenstein to Terminator, the cultural angst is the same: that which we create will eventually destroy us. Now we have Siri and driverless vehicles. The Singularity is what Terminator dramatized: the point at which an Artificial Intelligence becomes exponentially smarter than us, so that we may matter to it (we, its "parents") about as much as gnats matter to us.

I've read that some have projected 2030 as the year of The Singularity. Personally, I think that is more a hope than a prediction. I'll be 68 then, and I expect to be in reasonably good health. I'm guessing its advent won't hurt too much, and that it will be closer to Data and the Enterprise main computer than to HAL (2001: A Space Odyssey) or the T-1000. If humanity's children are to have any morals, they will have to be the ones we're willing to display towards one another as well as teach. In this current epoch, we're not good examples to emulate.

Isaac Asimov's Three Laws of Robotics:

1. A robot may not injure a human being or, through inaction, allow a human being to come to harm.

2. A robot must obey orders given it by human beings except where such orders would conflict with the First Law.

3. A robot must protect its own existence as long as such protection does not conflict with the First or Second Law.
