Topics: Civilization, Existentialism, Nanoengineering, Philosophy, Quantum Mechanics, Star Trek

As an undergraduate Engineering Physics major, I found that science classes disabuse you of notions that science fiction might leave you with.

"2001" was released in 1968, the year Martin Luther King Jr. and Robert F. Kennedy Sr. were assassinated. That was the second year of the original Star Trek series, one year before Apollo 11 landed on the moon and one year before Star Trek was canceled. The show then went into syndication and became a cult phenomenon, later celebrated and lampooned on Saturday Night Live in a sketch featuring William Shatner ("Get a life!").

When someone asks me if warp drive is "possible," I generally say no. Despite Miguel Alcubierre's 1994 warp-metric paper and extensive research since into the mathematics of what a warp bubble would "look" like, no one has found the effortless lay-up that would win a Nobel Prize. By early estimates, a warp bubble would take more energy than the universe contains, an obvious problem that needs to be solved.
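For readers curious what that mathematics looks like, here is a summary of the metric from Alcubierre's 1994 paper, in units where c = G = 1 (worth checking against the original before relying on any factor). Here x_s(t) is the path of the bubble's center along x, v_s = dx_s/dt is its speed, r_s is the distance from the bubble's center, and f is a shape function close to 1 inside the bubble and close to 0 far outside, with R setting the bubble radius and σ the wall thickness:

$$
ds^2 = -dt^2 + \left(dx - v_s(t)\, f(r_s)\, dt\right)^2 + dy^2 + dz^2,
\qquad r_s = \sqrt{\left(x - x_s(t)\right)^2 + y^2 + z^2}
$$

$$
f(r_s) = \frac{\tanh\!\left(\sigma (r_s + R)\right) - \tanh\!\left(\sigma (r_s - R)\right)}{2 \tanh(\sigma R)}
$$

The energy density measured by observers moving normal to the spatial slices then comes out as

$$
T^{\mu\nu} n_\mu n_\nu = -\frac{1}{8\pi}\,\frac{v_s^2 \left(y^2 + z^2\right)}{4 r_s^2} \left(\frac{df}{dr_s}\right)^2
$$

Every factor after the minus sign is non-negative, so the energy density is negative wherever the bubble wall has a gradient. That is why the warp drive calls for "exotic" matter, in addition to the sheer amount of energy involved.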

The faithful remain undaunted. I am challenged with the notion that the "physics for warp drive hasn't been discovered by Earthlings yet," and that, by the Trek timeline, Zefram Cochrane, or his real-world equivalent, hasn't been born yet. Neither have we suffered the calamity of a Third World War, as if the first two were simply "practice" for oblivion.

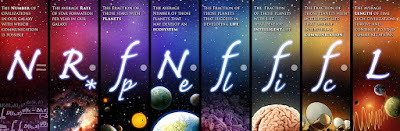

When someone counters that aliens have visited, or may still be visiting, Earth, I point out the vast distances between stars: at current, slow rocket speeds, we are roughly 150,000 years from Alpha Centauri, unless we reach Warp 1 by some miracle. An alien species that can cut that trip to weeks is vastly more advanced than we are, since our current technology falls short of even Type I on the Kardashev scale.
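The arithmetic behind that paragraph fits in a few lines. This is a minimal sketch under stated assumptions: Alpha Centauri taken as 4.37 light-years away, constant coasting speed with no acceleration or relativity, about 17 km/s as a stand-in for Voyager 1, Warp 1 treated as light speed (as Trek does), and Carl Sagan's interpolation of the Kardashev scale, K = (log₁₀ P − 6)/10 with P in watts. The 2 × 10¹³ W figure for humanity's total power use is an assumed round number, and the function names are hypothetical.

```python
import math

# 1 light-year is defined as the distance light travels in one Julian year.
C_KM_S = 299_792.458                 # speed of light, km/s
SECONDS_PER_YEAR = 365.25 * 86_400   # Julian year, s
LIGHT_YEAR_KM = C_KM_S * SECONDS_PER_YEAR
ALPHA_CEN_LY = 4.37                  # approximate distance to Alpha Centauri A/B

def travel_years(distance_ly: float, speed_km_s: float) -> float:
    """Coasting time at constant speed; ignores acceleration and relativity."""
    return distance_ly * LIGHT_YEAR_KM / speed_km_s / SECONDS_PER_YEAR

def speed_for_trip(distance_ly: float, years: float) -> float:
    """Constant speed (km/s) needed to cover distance_ly in the given years."""
    return distance_ly * LIGHT_YEAR_KM / (years * SECONDS_PER_YEAR)

def kardashev(power_watts: float) -> float:
    """Sagan's interpolation K = (log10(P) - 6) / 10, with P in watts."""
    return (math.log10(power_watts) - 6) / 10

if __name__ == "__main__":
    print(f"At ~17 km/s (about Voyager 1): {travel_years(ALPHA_CEN_LY, 17.0):,.0f} years")
    print(f"Speed for a 150,000-year trip: {speed_for_trip(ALPHA_CEN_LY, 150_000):.1f} km/s")
    print(f"Warp 1 (light speed): {travel_years(ALPHA_CEN_LY, C_KM_S):.2f} years")
    three_weeks = 21 / 365.25
    multiple = speed_for_trip(ALPHA_CEN_LY, three_weeks) / C_KM_S
    print(f"A three-week trip needs ~{multiple:.0f} times light speed")
    print(f"K for an assumed 2e13 W civilization: {kardashev(2e13):.2f}")
```

On these assumptions, the 150,000-year figure corresponds to a cruising speed of under 9 km/s, and a three-week crossing needs dozens of times light speed, which puts the gap between us and such visitors in concrete terms.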

Taking a friend's argument that aliens have the technology (Type II or greater) to visit our planet, and then might stop us from committing cultural suicide by self-extinction (we hope they're Vulcans, and not Klingons), I asked Claude.ai the following question:

Would it be possible to construct a spaceship with a Nano-composite meta material, such that a spaceship behaves like a Bose-Einstein Condensate? How would such a spaceship work?

Related link: https://www.nist.gov/video/creation-bose-einstein-condensate

Why did I ask this question? Because my undergraduate degree is in Engineering Physics, and my graduate thesis and dissertation are in nanoengineering. By making my spaceship a macroscopic Bose-Einstein Condensate fashioned from nanocomposite metamaterials, I would (hypothetically) let it quantum tunnel from one place to another, a jump that in our three-dimensional, fossil-fueled space would seem instantaneous, nay, magical. Think of Flatland, written in 1884 by Edwin Abbott Abbott (yes, his name repeated), in which a three-dimensional Sphere appears and disappears within the plane, baffling its two-dimensional inhabitants. The gist: “The book used the fictional two-dimensional world of Flatland to comment on the hierarchy of Victorian culture, but the novella’s more enduring contribution is its examination of dimensions.”

Note: This is a thought experiment. I expect a team of theoretical physicists to find flaws in Claude's conclusions. Maybe it will generate a paper or two. On the other hand, any "arguments" posted online, identified or anonymous, will likely be addressed in the following manner: "Yeah."

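Again, I asked Claude the question, and, as usual, Claude delivered. Still, I can't help picturing the same boot I wear in the yard when stamping out ant hills: a civilization that far ahead of us might treat us the way I treat ants. That's more Klingon than Vulcan.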

BEC-Like Spaceship: Critical Analysis of Quantum Metamaterial Propulsion

Theoretical Foundation

What Would a BEC-Like Spaceship Mean?

Source: Claude.ai

A Bose-Einstein Condensate spacecraft would theoretically exhibit quantum coherence at macroscopic scales, where the entire vessel behaves as a single quantum entity. This could enable revolutionary propulsion and navigation capabilities by exploiting quantum mechanical effects scaled up to engineering dimensions.

Critical Analysis of Feasibility

The Fundamental Challenges

1. Temperature Requirements

- Atomic-gas BECs require temperatures near absolute zero (nanokelvin range)

- Challenge: Maintaining quantum coherence in a space environment

- Potential solution: Metamaterial-induced artificial quantum states

2. Scale Paradox

- BECs typically involve thousands to millions of atoms

- Spaceship scale: ~10²⁶ atoms or more

- Critical question: Can quantum coherence survive at macroscopic scales?

3. Decoherence Threats

- Cosmic radiation

- Thermal fluctuations

- Gravitational interactions

- Internal vibrations and structural stress
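Rough numbers for the first two challenges can be sketched as follows, under stated assumptions. The temperature uses the textbook transition temperature of an ideal, uniform Bose gas, T_c = (2πħ²/(m k_B))·(n/ζ(3/2))^(2/3), evaluated for rubidium-87 (the atom used in the first BEC experiments) at an assumed density of 10²⁰ atoms per cubic metre. The scale estimate counts the atoms in a hypothetical 100-tonne hull made entirely of aluminium. Real experiments use trapped, non-uniform gases, so treat these as order-of-magnitude figures.

```python
import math

HBAR = 1.054571817e-34        # reduced Planck constant, J*s
K_B = 1.380649e-23            # Boltzmann constant, J/K
AMU = 1.66053906660e-27       # atomic mass unit, kg
AVOGADRO = 6.02214076e23      # atoms per mole
ZETA_3_2 = 2.612375348685488  # Riemann zeta(3/2)

def bec_critical_temperature(n_per_m3: float, mass_kg: float) -> float:
    """Ideal uniform Bose gas: T_c = (2*pi*hbar^2/(m*k_B)) * (n/zeta(3/2))**(2/3)."""
    prefactor = 2 * math.pi * HBAR**2 / (mass_kg * K_B)
    return prefactor * (n_per_m3 / ZETA_3_2) ** (2 / 3)

def atom_count(mass_kg: float, molar_mass_kg_per_mol: float) -> float:
    """Number of atoms in a sample made of a single element."""
    return mass_kg / molar_mass_kg_per_mol * AVOGADRO

RB87_MASS = 86.909 * AMU                   # rubidium-87 atomic mass
tc = bec_critical_temperature(1e20, RB87_MASS)
hull = atom_count(100_000.0, 0.026982)     # hypothetical 100-tonne aluminium hull

print(f"T_c for Rb-87 at 1e20 per m^3: {tc * 1e9:.0f} nK")
print(f"Atoms in a 100-tonne aluminium hull: {hull:.1e}")
```

The transition temperature lands in the hundreds of nanokelvin, and the hull comes to roughly 10³⁰ atoms, well within the list's "~10²⁶ atoms or more" and many orders of magnitude beyond any condensate made so far.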

Nano Composite Metamaterial Approach

Engineered Quantum Properties

Metamaterial Design Principles:

- Quantum Dot Arrays: Nanoscale quantum confinement structures

- Photonic Crystals: Control electromagnetic field interactions

- Plasmonic Networks: Collective electron oscillations

- Topological Insulators: Protected quantum states

Critical Innovation Required:

- Materials that maintain quantum coherence at room temperature

- Structures that amplify rather than destroy quantum effects

- Self-healing quantum states resistant to environmental decoherence
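To give a feel for "quantum confinement" and why room temperature is the hard part, here is the textbook infinite-square-well estimate E_n = n²h²/(8mL²) for an electron in a 5 nm box, compared with the thermal energy k_B T at 300 K. The free-electron mass, the hard-walled one-dimensional box, and the 5 nm width are simplifying assumptions; real quantum dots have an effective mass and finite, three-dimensional confinement. When the level spacing exceeds k_B T, thermal agitation has trouble knocking the electron out of its ground state.

```python
H = 6.62607015e-34       # Planck constant, J*s
M_E = 9.1093837015e-31   # free-electron mass, kg (assumption: no effective mass)
K_B = 1.380649e-23       # Boltzmann constant, J/K
EV = 1.602176634e-19     # joules per electronvolt

def box_level_ev(n: int, width_m: float, mass_kg: float = M_E) -> float:
    """Level n of an infinite 1-D square well, n^2 h^2 / (8 m L^2), in eV."""
    return n**2 * H**2 / (8 * mass_kg * width_m**2) / EV

def thermal_energy_ev(temp_k: float) -> float:
    """Characteristic thermal energy k_B * T, in eV."""
    return K_B * temp_k / EV

width = 5e-9  # hypothetical 5 nm quantum dot
spacing = box_level_ev(2, width) - box_level_ev(1, width)
kt_room = thermal_energy_ev(300.0)
print(f"E2 - E1 = {spacing * 1e3:.1f} meV vs k_B T at 300 K = {kt_room * 1e3:.1f} meV")
```

Because the levels scale as 1/L², shrinking the dot widens the gap, while growing the box toward everyday dimensions collapses it far below k_B T.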

Hypothetical Propulsion Mechanisms

1. Quantum Tunneling Propulsion

- Entire spacecraft could theoretically tunnel through spacetime barriers

- Bypass traditional momentum conservation limitations

- Enable instantaneous position changes without acceleration

2. Casimir Effect Amplification

- BEC state could coherently manipulate vacuum fluctuations

- Create asymmetric pressure differentials in quantum vacuum

- Generate thrust without reaction mass

3. Spacetime Curvature Manipulation

- Quantum coherent mass-energy distribution

- Potentially influence local gravitational fields

- Create "warp-like" effects through quantum geometry
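Two back-of-the-envelope numbers for the first two mechanisms, offered as a sketch: the standard thick-barrier tunneling estimate T ≈ exp(−2κL) with κ = √(2m(V − E))/ħ, and the ideal parallel-plate Casimir pressure π²ħc/(240 d⁴). The barrier heights (1 eV for an electron, a hypothetical 1 J for a 1 kg object), the 1 nm barrier width, and the 1 µm plate gap are illustrative assumptions, not parameters from Claude's answer. The tunneling function returns log₁₀ T because the macroscopic value underflows any floating-point type.

```python
import math

HBAR = 1.054571817e-34   # reduced Planck constant, J*s
C = 299_792_458.0        # speed of light, m/s
EV = 1.602176634e-19     # joules per electronvolt
M_E = 9.1093837015e-31   # electron mass, kg

def log10_tunneling(mass_kg: float, barrier_excess_j: float, width_m: float) -> float:
    """log10 of T ~ exp(-2*kappa*L), kappa = sqrt(2*m*(V - E)) / hbar (thick rectangular barrier)."""
    kappa = math.sqrt(2 * mass_kg * barrier_excess_j) / HBAR
    return -2 * kappa * width_m / math.log(10)

def casimir_pressure(gap_m: float) -> float:
    """Magnitude of the ideal parallel-plate Casimir pressure, pi^2*hbar*c/(240*d^4), in Pa."""
    return math.pi**2 * HBAR * C / (240 * gap_m**4)

electron = log10_tunneling(M_E, 1 * EV, 1e-9)  # electron, 1 eV above its energy, 1 nm wide
ship = log10_tunneling(1.0, 1.0, 1e-9)         # hypothetical 1 kg object, 1 J, 1 nm wide
p_1um = casimir_pressure(1e-6)                 # plates 1 micrometre apart

print(f"log10 T, electron: {electron:.2f}")
print(f"log10 T, 1 kg object: {ship:.2e}")
print(f"Casimir pressure at 1 um: {p_1um:.2e} Pa")
```

The electron gets through with odds of roughly 3 × 10⁻⁵, while the 1 kg object's exponent is on the order of −10²⁵, and a spacecraft is far heavier than 1 kg. The Casimir pressure at a 1 µm gap is about a millipascal and grows as 1/d⁴ as the gap closes.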

Operational Characteristics

Navigation and Control

Quantum State Steering:

- Coherent manipulation of the entire vessel's quantum state

- Navigation through quantum state superposition

- Possible multiple simultaneous trajectories

Information Processing:

- Entire ship acts as quantum computer

- Instantaneous information transfer throughout structure

- Collective decision-making at quantum level

Energy Requirements

Power Sources:

- Zero-point energy extraction (highly speculative)

- Quantum coherence maintenance systems

- Superconducting energy storage and distribution

Critical Technical Barriers

Materials Science Challenges

1. Room-Temperature Quantum Coherence

- Current metamaterials show limited quantum effects at practical temperatures

- Need breakthrough in quantum error correction at material level

- Requires fundamentally new understanding of decoherence

2. Structural Integrity

- Quantum materials often have different mechanical properties

- Must maintain both quantum coherence AND structural strength

- Engineering paradox: rigidity vs. quantum flexibility

3. Manufacturing Complexity

- Precise atomic-level assembly required

- Quality control at quantum scale

- Scalability from laboratory to spacecraft dimensions

Fundamental Physics Questions

1. Macroscopic Quantum Mechanics

- Unclear if quantum mechanics applies unchanged at spacecraft scales

- Possible emergence of classical behavior through complexity

- May require new physics beyond current quantum theory

2. Relativity Interactions

- How would quantum coherence interact with relativistic effects?

- Spacetime curvature impacts on quantum states

- Potential conflicts between quantum mechanics and general relativity
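One way to see why quantum behavior fades at spacecraft scales is the thermal de Broglie wavelength, λ_th = h/√(2πm k_B T). In an ideal gas, condensation sets in when the phase-space density nλ_th³ reaches about ζ(3/2) ≈ 2.612, that is, when neighboring wavelengths overlap. The sketch below compares a rubidium-87 atom at 100 nK with a hypothetical 1,000-tonne ship cooled all the way to the 2.7 K of the cosmic microwave background, treating the whole ship as a single particle, as a ship-wide wavefunction would. Both masses and temperatures are illustrative assumptions.

```python
import math

H = 6.62607015e-34       # Planck constant, J*s
K_B = 1.380649e-23       # Boltzmann constant, J/K
AMU = 1.66053906660e-27  # atomic mass unit, kg

def thermal_de_broglie(mass_kg: float, temp_k: float) -> float:
    """Thermal de Broglie wavelength h / sqrt(2*pi*m*k_B*T), in metres."""
    return H / math.sqrt(2 * math.pi * mass_kg * K_B * temp_k)

rb_atom = thermal_de_broglie(86.909 * AMU, 100e-9)  # Rb-87 atom at 100 nK
ship = thermal_de_broglie(1.0e6, 2.7)               # hypothetical 1,000-tonne ship at 2.7 K

print(f"Rb-87 at 100 nK: {rb_atom:.1e} m")
print(f"1,000-tonne ship at 2.7 K: {ship:.1e} m")
```

The atom's wavelength comes out near 0.6 µm, larger than the roughly 0.2 µm spacing between atoms at 10²⁰ per cubic metre; the ship's is around 10⁻²⁶ m, more than ten orders of magnitude smaller than a proton.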

Hypothetical Design Architecture

Core Systems

1. Quantum Coherence Engine

- Central BEC maintenance system

- Continuous cooling and isolation

- Quantum error correction networks

2. Metamaterial Hull

- Layered quantum composite structure

- Self-repairing quantum states

- Environmental shielding systems

3. Navigation Quantum Computer

- Integrated throughout ship structure

- Collective quantum processing

- Real-time spacetime analysis

Operational Modes

1. Classical Mode

- Standard propulsion and navigation

- Quantum systems in standby

- Emergency backup systems

2. Quantum Coherent Mode

- Full BEC-like operation

- Advanced propulsion capabilities

- Enhanced computational processing

3. Hybrid Mode

- Partial quantum coherence

- Selective quantum effects

- Balanced performance and stability

Critical Assessment

Likelihood of Success

Optimistic Scenario (50+ years):

- Breakthrough in room-temperature quantum materials

- New understanding of macroscopic quantum mechanics

- Revolutionary manufacturing techniques

Realistic Assessment:

- Fundamental physics barriers may be insurmountable

- Engineering challenges exceed current technological trajectory

- May require a completely new theoretical framework

Alternative Approaches

1. Quantum-Enhanced Conventional Craft

- Quantum sensors and computers

- Metamaterial components with limited quantum effects

- More achievable near-term goals

2. Hybrid Classical-Quantum Systems

- Selective use of quantum effects

- Conventional structure with quantum subsystems

- Gradual integration of quantum technologies

Conclusion

While a true BEC-like spaceship remains highly speculative, the concept drives important research directions in quantum materials and macroscopic quantum mechanics. The most promising near-term approach may be quantum-enhanced spacecraft that exploit specific quantum effects rather than achieving full macroscopic quantum coherence.

Critical Insight: The boundary between quantum and classical physics at macroscopic scales remains one of the deepest unsolved problems in physics. A BEC spaceship would require not just engineering breakthroughs, but fundamental advances in our understanding of quantum mechanics itself.

Practical Path Forward: Focus on metamaterials that exhibit quantum properties at increasing scales, while developing theoretical frameworks for macroscopic quantum systems. Even partial success could revolutionize both space technology and our understanding of quantum mechanics.

"Two possibilities exist: either we are alone in the Universe or we are not. Both are equally terrifying." Arthur C. Clarke, author of "2001: A Space Odyssey."