
A new publication by cryptography experts at the National Institute of Standards and Technology (NIST) proposes the direction the technical agency will take to develop a more secure approach to encryption. This approach, called threshold cryptography, could overcome some of the limitations of conventional methods for protecting sensitive transactions and data.

The document, released today in a final version as NIST Roadmap Toward Criteria for Threshold Schemes for Cryptographic Primitives (NISTIR 8214A), offers an outline for developing a new way to implement the cryptographic tools that developers use to secure their systems. Its authors are inviting the cryptography community to collaborate with them on NIST’s budding Threshold Cryptography project, which in part seeks to ensure that threshold implementations are interoperable.

“We are kicking the threshold cryptography development effort into high gear,” said Apostol Vassilev, a NIST computer scientist. “Over the coming months, the Threshold Cryptography project will be engaging with the public to define criteria for this work. We want to get feedback from the community so we can consider a variety of threshold schemes and standardization paths.”

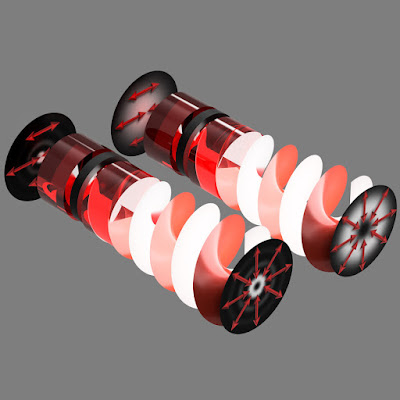

Threshold cryptography takes its name from the idea that individual keyholders cannot open a lock on their own, as they commonly can in conventional cryptography. Instead, out of a group of keyholders, a minimum number of them (a “threshold” number) must work together to open the lock. In practice, this lock is an electronic cryptosystem that protects confidential information, such as a bank account number or an authorization to transfer money from that account.
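To make the "minimum number of keyholders" idea concrete, here is a minimal sketch in Python of Shamir's secret sharing, one classic threshold technique. It is an illustration only and is not taken from the NIST roadmap: the function names `split_secret` and `recover_secret`, the prime modulus, and the 3-of-5 setting are all assumptions chosen for the example.

```python
import secrets

# Assumption for illustration: all arithmetic is done modulo this Mersenne prime.
PRIME = 2**127 - 1


def split_secret(secret, threshold, num_shares):
    """Split `secret` into `num_shares` shares; any `threshold` of them recover it."""
    if not 1 <= threshold <= num_shares:
        raise ValueError("threshold must be between 1 and num_shares")
    if not 0 <= secret < PRIME:
        raise ValueError("secret must be in the range [0, PRIME)")
    # Random polynomial of degree threshold - 1 whose constant term is the secret.
    coeffs = [secret] + [secrets.randbelow(PRIME) for _ in range(threshold - 1)]
    shares = []
    for x in range(1, num_shares + 1):
        y = 0
        for c in reversed(coeffs):  # Horner's rule, highest-degree term first
            y = (y * x + c) % PRIME
        shares.append((x, y))
    return shares


def recover_secret(shares):
    """Recover the constant term by Lagrange interpolation at x = 0."""
    secret = 0
    for i, (xi, yi) in enumerate(shares):
        num, den = 1, 1
        for j, (xj, _) in enumerate(shares):
            if i != j:
                num = (num * -xj) % PRIME
                den = (den * (xi - xj)) % PRIME
        # pow(den, -1, PRIME) is the modular inverse (Python 3.8+).
        secret = (secret + yi * num * pow(den, -1, PRIME)) % PRIME
    return secret


# Hypothetical usage: five keyholders, any three of whom can open the "lock".
shares = split_secret(123456789, threshold=3, num_shares=5)
recovered = recover_secret(shares[:3])
```

With fewer shares than the threshold, interpolation yields an unrelated value, so no single keyholder learns the secret. Note that this sketch reassembles the secret in one place; threshold schemes for cryptographic primitives generally aim to carry out the operation without ever doing so.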

NIST Kick-Starts ‘Threshold Cryptography’ Development Effort